Revisiting knowledge elicitation & Bayesian reasoning for national security decisions

In a previous post, I argued that national security today is less about accumulating information and more about anticipating how situations and threats are likely to develop over time. Intelligence advantage is no longer defined by the volume of data you collect, but by the ability to organize uncertainty, weigh competing explanations, and support timely decisions despite incomplete or contested information. In this post, I apply that argument by revisiting a high-level analytics seminar I attended in 2018, which illustrated how states can reason systematically about emerging threats even when intelligence is fragmented, degraded, or deliberately distorted by adversaries (as is often the case).


A Quiet Warning

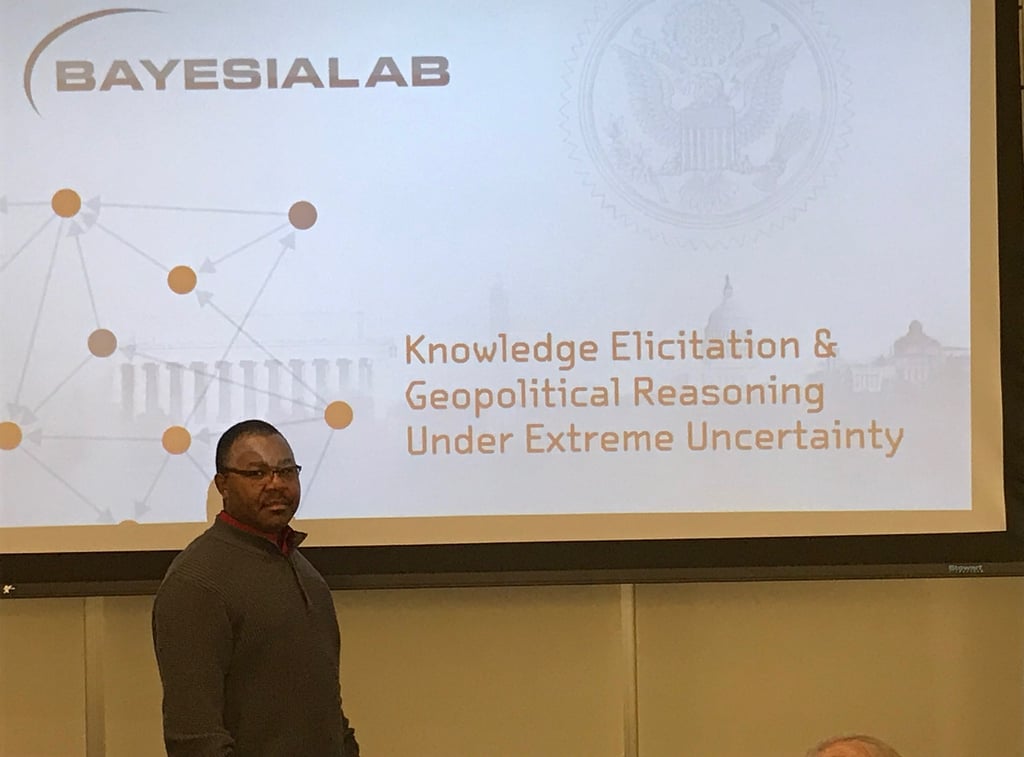

On December 3, 2018, I was invited to a high-level intelligence workshop on Knowledge Elicitation & Reasoning with Bayesian Networks for Policy Analysis, held at the Virginia Tech Applied Research Center in Arlington, Virginia, USA. The composition of the audience was revealing: intelligence officers, military strategists, counterterrorism analysts, decision scientists, risk analysts, and wargaming professionals associated with the United States, France, and Israel, states known for some of the world’s most sophisticated intelligence systems and most rigorous threat analysis.

The workshop addressed a persistent national security dilemma: how to make high-stakes decisions when data are incomplete, deceptive, or unavailable. Rather than defaulting to intuition or waiting for perfect intelligence—which rarely arrives—we focused on building Bayesian Network (BN) models directly from expert judgment.

A Bayesian Network is a probabilistic decision model that combines human expertise with probability and causal reasoning, drawing on computer science and artificial intelligence, probability theory and statistics, and graph theory. In practical terms, it maps how the key factors in a complex problem influence one another and assigns probabilities to those relationships. Because variables, causal relationships, and uncertainty are encoded explicitly, analysts can reason systematically about uncertainty and test “what-if” scenarios, and leaders can simulate interventions and assess risk trajectories before committing resources or forces.
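To make the idea concrete, here is a minimal sketch in plain Python of a three-node network queried by brute-force enumeration. Everything in it is my own illustration rather than material from the workshop: the node names (`Threat`, `Chatter`, `Movement`), the probabilities, and the helper functions are all hypothetical, and a real model would use dedicated software and far more variables.

```python
from itertools import product

# Hypothetical network: Threat -> Chatter, Threat -> Movement.
# Each entry maps a node to (parents, table of P(node=True | parent values)).
# All numbers are invented for illustration only.
NETWORK = {
    "Threat":   ((), {(): 0.10}),
    "Chatter":  (("Threat",), {(True,): 0.70, (False,): 0.20}),
    "Movement": (("Threat",), {(True,): 0.60, (False,): 0.10}),
}


def joint_probability(world):
    """Probability of one complete true/false assignment to every node."""
    p = 1.0
    for node, (parents, table) in NETWORK.items():
        p_true = table[tuple(world[parent] for parent in parents)]
        p *= p_true if world[node] else 1.0 - p_true
    return p


def posterior(query, evidence):
    """P(query=True | evidence), summing the joint over consistent worlds."""
    nodes = list(NETWORK)
    totals = {True: 0.0, False: 0.0}
    for values in product([True, False], repeat=len(nodes)):
        world = dict(zip(nodes, values))
        if all(world[name] == value for name, value in evidence.items()):
            totals[world[query]] += joint_probability(world)
    return totals[True] / (totals[True] + totals[False])


if __name__ == "__main__":
    print(posterior("Threat", {}))                  # belief before any reports
    print(posterior("Threat", {"Chatter": True}))   # one indicator observed
    print(posterior("Threat", {"Chatter": True, "Movement": True}))
```

With these invented numbers, belief in the threat moves from 10 percent with no evidence to 28 percent after one indicator and 70 percent after both, which is the kind of “what-if” question the model is meant to answer.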

Turning Expert Judgment into Strategic Warning

Every intelligence system relies on human judgment. The failure occurs when that judgment remains informal, unstructured, and unverifiable. The workshop demonstrated how tacit expertise—held by analysts, operators, and regional specialists—can be transformed into computational, auditable models capable of supporting national security decisions.

A critical enabler was the Bayesia Expert Knowledge Elicitation Environment (BEKEE). Unlike traditional briefings or consensus meetings, BEKEE captures individual expert assessments independently, reducing groupthink, hierarchy distortion, and political pressure. This is not a marginal improvement; it directly affects whether early warnings survive bureaucratic and political filtering.
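The principle of collecting assessments independently and only then combining them can be sketched with a linear opinion pool, a common aggregation technique. This is an assumption on my part for illustration; I am not describing how BEKEE itself aggregates responses, and the function name, weights, and sample estimates are hypothetical.

```python
from statistics import pstdev


def pool_estimates(estimates, weights=None):
    """Combine independent expert probabilities with a weighted average.

    Returns (pooled probability, spread). The spread is the population
    standard deviation of the raw estimates, a rough disagreement signal.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    if any(not 0.0 <= p <= 1.0 for p in estimates):
        raise ValueError("estimates must be probabilities in [0, 1]")
    if weights is None:
        weights = [1.0] * len(estimates)  # equal weight by default
    if len(weights) != len(estimates):
        raise ValueError("one weight per estimate is required")
    pooled = sum(w * p for w, p in zip(weights, estimates)) / sum(weights)
    return pooled, pstdev(estimates)


if __name__ == "__main__":
    # Three analysts answer the same question without seeing each other.
    pooled, spread = pool_estimates([0.2, 0.4, 0.6])
    print(f"pooled={pooled:.2f} spread={spread:.2f}")
```

Reporting the spread alongside the pooled value matters: a wide spread tells the decision-maker that the experts disagree, which a consensus meeting would tend to hide.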

The deeper strategic value is this: Bayesian reasoning allows nations to preserve uncertainty rather than suppress it. Instead of false precision or vague warnings, leaders receive probabilistic assessments that show why a threat is rising, which drivers matter most, and where intervention is likely to succeed or fail.
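One simple way to ask “which drivers matter most” is a one-at-a-time sensitivity check: nudge each input up and down and see how far the assessed threat probability moves. The sketch below assumes a single hypothetical indicator and invented numbers; the parameter names are mine, and real tools offer more rigorous sensitivity measures.

```python
def threat_posterior(prior, p_chatter_if_threat, p_chatter_if_none):
    """Bayes' rule: P(threat | chatter observed)."""
    numerator = prior * p_chatter_if_threat
    return numerator / (numerator + (1.0 - prior) * p_chatter_if_none)


# Invented baseline inputs for illustration only.
BASELINE = {
    "prior": 0.10,
    "p_chatter_if_threat": 0.70,
    "p_chatter_if_none": 0.20,
}


def rank_drivers(delta=0.05):
    """Rank inputs by how much the posterior swings when each moves +/- delta."""
    swings = {}
    for name, value in BASELINE.items():
        low = dict(BASELINE, **{name: value - delta})
        high = dict(BASELINE, **{name: value + delta})
        swings[name] = abs(threat_posterior(**high) - threat_posterior(**low))
    return sorted(swings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, swing in rank_drivers():
        print(f"{name}: swing of {swing:.3f}")
```

In this toy case the prior dominates, followed by the false-alarm rate of the indicator, which suggests where additional collection or expert attention would pay off most.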

Serious National Security Applications—not Theory

The cases we explored during the seminar focused on military mission planning, insurgency evolution, counterterrorism, espionage detection, and policy intervention analysis. These were not merely academic exercises; we addressed the core problems national leaders face when confronting gray-zone conflicts, proxy warfare, and internal destabilization.

During and after the workshop, I was able to help evaluate several African cases (Nigeria, Libya, and Malawi), not because these countries were unique, but because they illustrated a universal pattern: strategic warnings existed, but leadership failed to acknowledge them, let alone act on them. The reasons for that failure are not technical. They are institutional and political.

The Bottom Line: Strategic Surprise Begins in the Analytic Layer

National security disasters rarely originate on the battlefield, and regimes do not fail overnight. Failure begins earlier, much earlier, in the analytic layer, when uncertainty is ignored, expertise is informal, and warning signals are dismissed because they lack numerical certainty. While Bayesian reasoning does not eliminate uncertainty, it forces leaders to confront it before adversaries exploit it.

The lesson from 2018 remains stark: states that systematize expert analysis gain time, options, and leverage. States that do not do so eventually face crises they can neither explain nor control. That distinction becomes even more consequential when we examine leadership resistance to such methods.

At an intelligence analysis workshop at the Virginia Tech Applied Research Center in Arlington, Virginia, USA